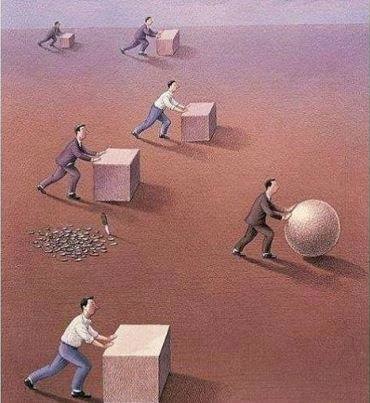

The problem

On previous posts I described how to perform non-linear curve fitting in Ptyhon and Julia. At their core non-linear and linear curve fitting (or regression) are optimization problems in which we find the parameters that minimize an objective function. The entire field of mathematical optimization is concerned with finding the most efficient and accurate methods to minimize such functions.

On the other hand, the current standard to find the optimal values for the parameters of the algorithms used in machine learning is to perform a random search or a grid search throughout the space of the possible values that such parameters can take. These approaches have several limitations:

- They are not computationally efficient for large data sets

- the parameters tested are not informed in any way by the results from the previous step.

The solution

However, the implementation of optimization-driven approaches for scikit-learn is not a trivial matter. Thankfully, James Bergstra and other brave souls have created hyperopt, a Python library for optimizing over awkward search spaces with real-valued, discrete, and conditional dimensions, which makes it ideal for tuning hyper parameters with scikit-learn.

What we need

In order to tune the parameters of scikit-learn estimator, hyperopt needs the following:

1. Data

2. The objective function to be minimized

3. The search space from which to sample the parameters

4. The algorithm to be used for the minimization of the objective function, and the number of time the optimization should be run

Python implementation

#modules

from sklearn.metrics.regression import mean_absolute_error as mae

from sklearn.metrics import make_scorer

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.ensemble import GradientBoostingRegressor

from hyperopt import hp, fmin, tpe

from hyperopt.pyll import scope

import numpy as np

# hyperopt object for

scope.define(GradientBoostingRegressor)

def train_GradientBoostingRegressor(Xdata, Ydata, loss='ls' ,alpha = 0.50, cv = 2, n_steps = 10):

"""

Trains a Gradient Boosting Regressor using bayesian optimization

Parameters

----------

Xdata: numpy array of size KxN and composed of floating and/or integers

Ydata: numpy array of size K (1D array) of floating

loss: loss function to be optimized.

alpha: quantile for the quantile and hubber loss; floating < 1.0 and > 0.0

CV: K-fold cross-validation size for the training procedure

n_steps: Number of times the `hyperopt` mimizer will run to find the optimal parameters

Returns

-------

Regressor : A sckiki-learn obkect with the trained Gradient Boosting Regressor

"""

#split data

X_train, X_test, y_train, y_test = train_test_split(Xdata, Ydata, test_size=.33, random_state = 42)

# create and objective function

def objective_function_regression(estimator):

mae_array = cross_val_score( estimator, X_train, y_train, cv= cv, n_jobs=-1,

scoring = make_scorer(mae) )

return mae_array.mean()

# search space

n_estimators = hp.randint('n_estimators',1000)

learning_rate = hp.loguniform('learning_rate',-3,1)

max_depth = hp.randint('max_depth', 10)

max_features = hp.randint('max_features',X_train.shape[1])

min_samples_leaf = hp.randint('min_samples_leaf', 10)

criterion = hp.choice('criterion', ['friedman_mse'])

# model / estimator to be optimized

est0 = (0.1, scope.GradientBoostingRegressor( loss = loss,

alpha = alpha,

n_estimators = n_estimators + 1,

learning_rate = learning_rate,

max_depth = max_depth + 1,

max_features = max_features + 1,

min_samples_leaf = min_samples_leaf + 1,

criterion = criterion,

random_state= 101)

)

# search space

search_space_regression = hp.pchoice('estimator', [est0])

print('--'*20)

print('Finding optimal parameters')

# perform the optimization

best = fmin(fn= objective_function_regression,

space= search_space_regression,

algo = tpe.suggest,

max_evals = n_steps,

verbose = 0 # The number of iterations

)

# Allocate optimized parameters and apply to test data set

Regressor = GradientBoostingRegressor( loss = loss, alpha = alpha,

learning_rate = best['learning_rate'],

max_depth = best['max_depth'],

max_features = best['max_features'],

min_samples_leaf = best['min_samples_leaf'],

n_estimators = best['n_estimators'],

random_state = 101

)

# fit

Regressor.fit(X_train,y_train)

#evaluate

yhat = Regressor.predict(X_test) ;

error_pct = np.round( np.median(np.abs(yhat - y_test)), 2)

#print('--'*20)

print(

"{} {}".format('The Median Abs. Error (%) for the test set is :', error_pct)

)

return Regressor, y_test, yhat

Now, we can use the Boston housing data set to test our beautiful code:

from sklearn.datasets import load_boston

from sklearn.model_selection import train_test_split

D= load_boston()

R1, ytest1, yhat1 = train_GradientBoostingRegressor( D.data,

D.target,

loss='quantile',

alpha = 0.50,

n_steps = 50)

I am data scientist scientist, passionate about helping people using mathematics, programming and chemistry